TL;DR

AI already underpins drug discovery and protein folding; in quantum materials, it lags for one reason: lack of experimental data for training AI models.

AI is transforming how we do science across domains. Yet for quantum materials – which could enable loss-free power transmission, room temperature quantum computers, intrinsically secure communications, and medical sensors thousands of times more sensitive than today's – AI remains hamstrung. The culprit is our reliance on theoretical simulations instead of real-world experiments: no simulation fully captures how quantum particles interact in complex materials, and even our largest supercomputers cannot predict emergent behaviour with actionable accuracy.

The core issue is straightforward: we lack a curated, high-quality experimental dataset. Without a rigorously standardised, richly annotated body of measurements, AI models make predictions disconnected from physical reality, impeding scientific progress and technological deployment. To close this gap, we must build a comprehensive experimental dataset that consolidates data across diverse materials classes, is captured under standardised protocols, and is structured in a unified “data language” (ontology) that both humans and AI can understand. Only with this foundation will machine learning models be able to make trustworthy predictions about material properties, guide experiments, and accelerate discovery at scale, with the accuracy required for practical deployment.

To demonstrate feasibility with speed and efficiency, we propose a national initiative centred on building an open, AI-ready experimental data ecosystem for quantum materials.

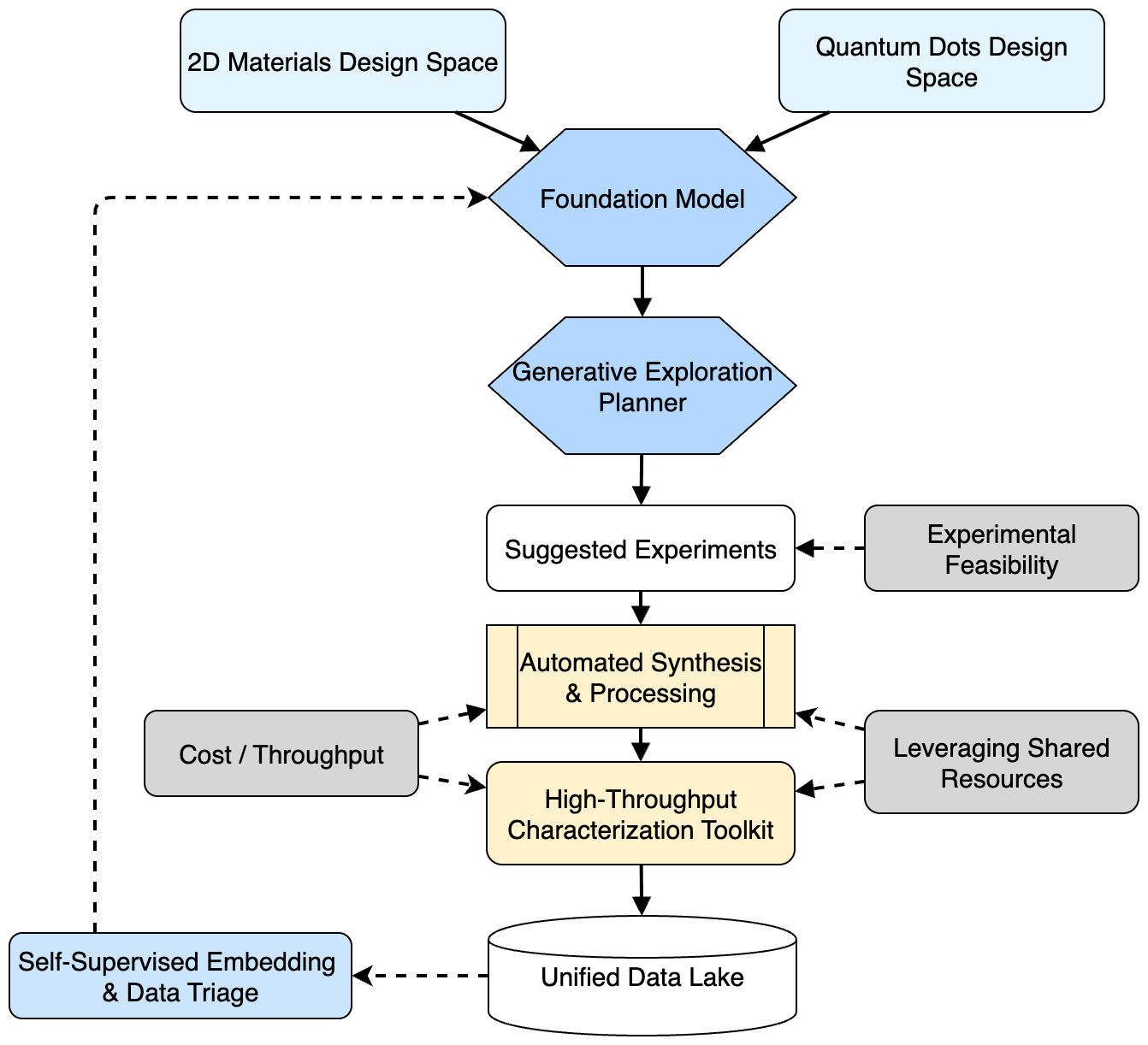

In its initial phase, this effort could target two complementary material classes: two-dimensional (2D) crystals and zero-dimensional (0D) quantum dots (QDs). Both systems owe their remarkable properties to quantum confinement at the nanometre scale – a shared feature that allows a unified, automated discovery pipeline to accelerate breakthroughs across both domains. The pilot will demonstrate cost‑effective data generation at scale and create an expandable framework for broader quantum materials.

The UK is uniquely positioned for this effort: it combines world-leading expertise in graphene and 2D materials with a sovereign, cadmium-free QD supply chain and wide national research infrastructure capable of rapid, large-scale measurements.

For the UK, the impact is substantial. This initiative will:

Accelerate innovation in clean energy, advanced electronics, quantum computing, and national security – cutting development cycles by 10×-100×.

Drive economic growth by lowering R&D costs and spawning high‑value materials industries, generating multi-billion-pound opportunities.

Establish the UK as a global leader at the intersection of AI, data infrastructure, and quantum technology, creating a first-mover advantage that attracts talent, investment, and strategic partnerships.

Datasets are the foundation of future research and technology in a world fuelled by AI – the new raw material that major nations are already stockpiling as strategic reserves. By making a targeted investment now, the UK can turn its scientific strengths into those very reserves, converting them into durable technological leadership while simultaneously capturing a significant share of the emerging quantum economy.

Introduction

Quantum materials – where quantum physics dictates extraordinary properties – are the foundation for technologies of enormous potential. These properties, such as superconductivity permitting lossless electrical flow, offer capabilities that conventional materials cannot match1. Yet today’s most widely used materials at the technological frontier – the century-old silicon for logic or aluminium for qubits – are nearing their limits, even though they remain the focus of increasingly incremental and costly research. To unlock step-change advances, we must shift toward new classes of materials. Quantum materials could address this challenge: they are inherently complex, rich in functionality, and capable of enabling next-generation breakthroughs. The strategic development of quantum materials promises to transform industries and tackle critical global challenges, from sustainable energy production to advanced computing systems2.

Quantum materials come in diverse forms – from two-dimensional atomic layers to zero-dimensional quantum dots – each exemplifying unique quantum confinement effects. For example, graphene (a single layer of carbon atoms) has exceptional electrical conductivity because it hosts massless Dirac fermions, enabling ultra-fast electronics and new quantum devices. On the other end, semiconductor quantum dots – sometimes called “artificial atoms” – have electronic structures that can be finely tuned simply by changing their size, resulting in precisely controlled light emission for high-efficiency displays, medical imaging, and quantum communication.3 Despite their different geometries, both 2D materials and quantum dots owe their extraordinary properties to quantum effects emerging at the nanometre scale, a unifying principle that allows us to study and accelerate breakthroughs in both classes using shared experimental and AI-driven approaches.

This white paper outlines how quantum materials can power next-generation technologies and why accelerating their discovery with AI is both urgent and essential4.

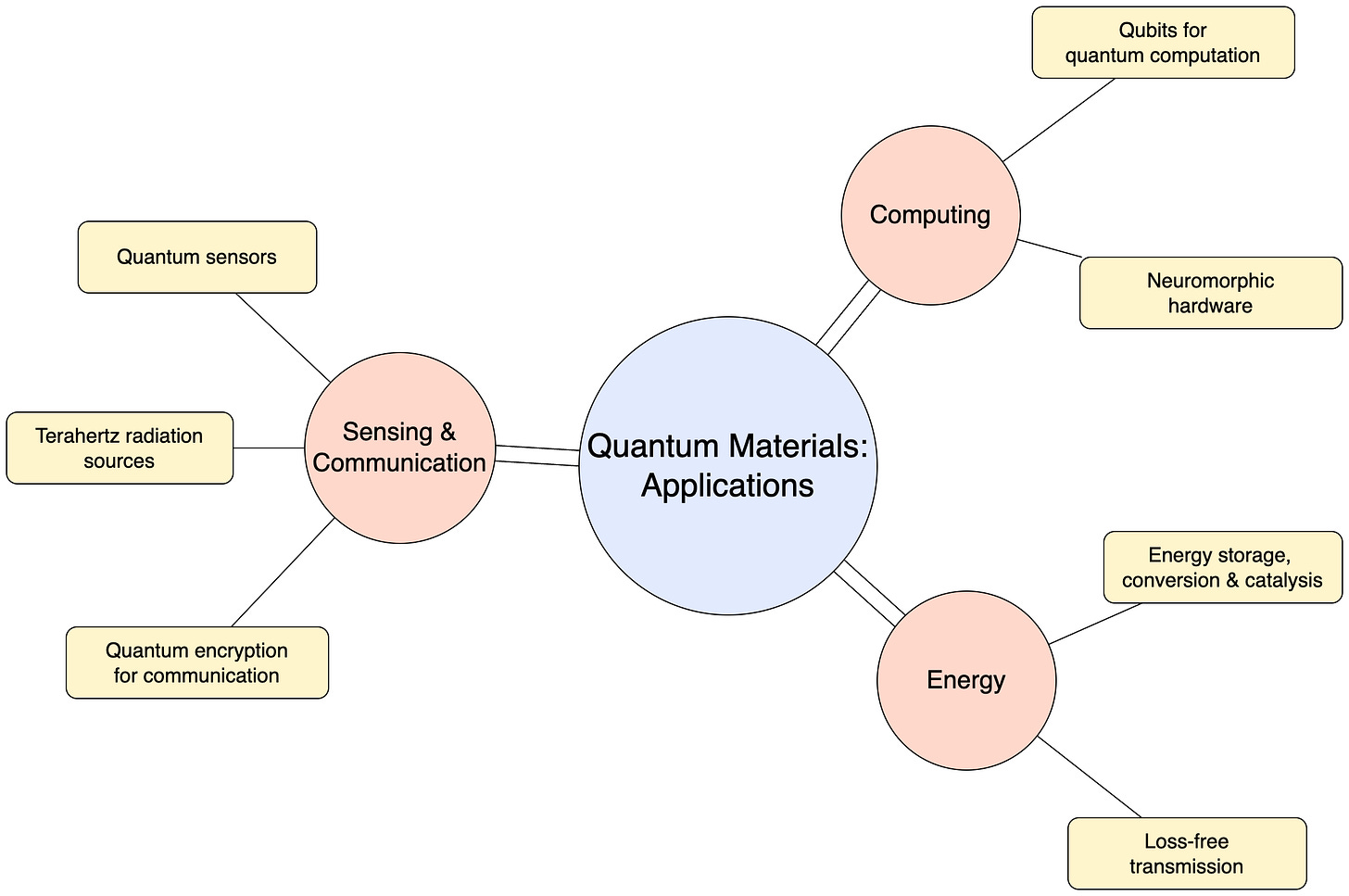

Transformative technologies enabled by quantum materials

Quantum materials are “hardware accelerators” for physics – transforming subtle quantum effects into powerful real-world functionalities that have the potential to revolutionise entire industries. Their impact falls into three strategic domains that align with UK industrial strengths and societal needs.

Quantum information and ultra‑efficient AI hardware

Quantum materials provide the building blocks for the upcoming revolutions in computing – in both fault‑tolerant quantum processors and brain‑inspired neuromorphic chips.

Scalable, stable qubits for quantum computing. Quantum processors promise to solve problems that defeat classical machines, but only if their qubits preserve coherence long enough to complete complex calculations. Oxford Economics estimates that achieving this could lift UK productivity by 7% by 2045, equivalent to £212 billion in additional gross value added5. Two-dimensional platforms – such as few-layer graphene, topological insulators, or superconducting transition-metal dichalcogenides – already demonstrate suppressed noise and extended coherence6,7. Quantum dots offer a complementary, CMOS‑compatible path: isotopically pure Si/SiGe spin qubits now reach >99% fidelity with 200 µs coherence at 1 K, while InAs dot molecules sustain microsecond hole‑spin coherence under all‑optical control8,9,10. Looking further ahead, topological qubits built on Majorana modes promise intrinsic resistance to decoherence and could unlock truly fault-tolerant architectures11. Together, these advances in the quantum supply chain outline a realistic path to practical quantum computers capable of revolutionising cryptography, drug discovery and complex-system optimisation – fields that underpin many of the UK’s high‑value industries.

Neuromorphic hardware for ultra-efficient AI: AI’s accelerating energy demands threaten to overwhelm national infrastructure, with data centres alone projected to consume over 20 TWh annually in the UK by the early 2030s – reaching 10% of the nation’s total electricity use, equivalent to the output of several large power stations. In contrast, the human brain operates at just ~20 W while performing > 10¹⁵ synaptic operations per second – a benchmark of energy efficiency that conventional silicon-based computing misses by multiple orders of magnitude12,13. 2D materials already provide memristor, memcapacitor and memtransistor elements that switch below 100 zeptojoules per operation, nearing the thermal limit at room temperature (k_BT ≈ 4 zJ)14,15,16. Zero‑dimensional quantum dots extend the palette to optoelectronic synapses: QD devices have demonstrated multispectral, light‑gated plasticity with femtojoule training energy and nanosecond response, enabling retina‑like pre‑processing directly on chip17,18. A recent roadmap notes that gradient‑trained spiking networks running on emerging neuromorphic elements are becoming “off‑the‑shelf” for commercial adoption – a tipping point reminiscent of early GPUs in deep learning19,20. Quantum-material-enabled devices could lead to neuromorphic chips that process data in parallel with minimal power, potentially achieving over 10⁶× greater energy efficiency than current silicon-based CMOS architectures21,22. If deployed widely, such systems could reduce AI-related power consumption in the UK by up to 10 TWh annually by 2040, saving over £2 billion per year in electricity costs and significantly cutting carbon emissions. They would also enable edge-AI applications – like wearable health monitoring and real-time disease diagnostics – to run on microwatts of power, extending battery life by 10-100× and unlocking ultra-low-energy intelligent systems at scale23,24.
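The energy figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below is only a back-of-envelope check: it assumes "room temperature" means 300 K and treats the brain's power budget as spread evenly over its synaptic operations. The function names are illustrative.

```python
# Back-of-envelope check of the energy scales quoted in the text.
K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)
ROOM_TEMPERATURE_K = 300.0  # assumption: "room temperature" taken as 300 K


def thermal_energy_zj(temperature_k: float) -> float:
    """Return k_B * T in zeptojoules (1 zJ = 1e-21 J)."""
    return K_B * temperature_k / 1e-21


def energy_per_operation_fj(power_w: float, ops_per_second: float) -> float:
    """Return the average energy per operation in femtojoules (1 fJ = 1e-15 J)."""
    return power_w / ops_per_second / 1e-15


kbt_zj = thermal_energy_zj(ROOM_TEMPERATURE_K)
brain_fj = energy_per_operation_fj(power_w=20.0, ops_per_second=1e15)

print(f"k_B*T at 300 K: {kbt_zj:.2f} zJ")
print(f"Brain, 20 W over 1e15 ops/s: {brain_fj:.1f} fJ per operation")
print(f"A 100 zJ switch is about {100.0 / kbt_zj:.0f} k_B*T")
```

Under these assumptions the thermal energy comes out close to the 4 zJ quoted, and the brain's budget works out to about 20 fJ per synaptic operation, an upper bound given that the operation count is stated as a lower limit.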

Quantum sensing, imaging and secure communications

Quantum materials can amplify the tiniest magnetic, electrical, or optical signals into clear, measurable outputs – unlocking a new generation of sensors, imaging devices, and secure communications.

Quantum sensors for healthcare: Quantum materials can enable sensors that revolutionise healthcare diagnostics and monitoring25. For example, nitrogen-vacancy centres in diamond power ultra-sensitive magnetometers capable of detecting faint magnetic fields with sensitivities better than 1 pT/√Hz – 100× better than portable alternatives and rivalling cryogenic SQUID systems while offering greater practicality (portability, cost)26. Quantum sensors27,28 can map brain activity (100 fT to 1 pT) at 1 mm resolution, and enable advanced heart monitoring, detecting 10-100 pT fields at a distance of 1 cm from the chest29. When paired with graphene or other 2D materials, which expand their sensitivity to electric fields, these devices could achieve near-real-time imaging (1-10 frames/second) of neuronal and cardiac activity down to cellular processes like calcium signalling at 100 nm resolution30,31,32,33. Quantum-enhanced assays can also detect cancer biomarkers, such as PSA, at < 1 pg/mL in serum – 100× more sensitive than current assays – potentially increasing early cancer detection rates and boosting patient survival34,35,36. Quantum dots extend the spectrum: room‑temperature InP/ZnSe QD photodiodes now deliver multispectral IR detection for tissue imaging, while cadmium‑free QDs from UK foundries (e.g., Nanoco Group plc) have enabled safe, in‑vivo tracking of tumours37. QDs are also used in flat-panel X-ray scintillators and high-colour-gamut micro-LED displays, linking advances in healthcare, consumer electronics, and industrial inspection. By 2040, portable quantum sensors costing under £1,000 could become standard NHS diagnostic tools, supporting earlier and more accurate detection of conditions such as cardiac arrhythmias and cancer.
With over 600,000 people living with epilepsy, > 1.6 million with atrial fibrillation, and nearly 400,000 new cancer cases diagnosed annually in the UK, quantum sensors could greatly improve outcomes through faster diagnosis and personalised treatment planning – especially for hard-to-detect cases like prostate or pancreatic cancer38,39,40,41,42.

Portable sources of tunable coherent terahertz radiation: Terahertz radiation, ranging from 0.1 to 10 THz (wavelengths of 0.03 to 3 mm) between microwaves and infrared, holds enormous potential for breakthroughs in imaging, communications, and spectroscopy. However, compact and tunable THz sources remain elusive. Quantum materials such as Dirac semimetals (like graphene), topological insulators, or layered superconductors present a promising approach with their unique electronic structures that allow precise control over electron dynamics and enable the development of miniature, coherent THz emitters43,44,45. In the UK, this innovation is highly relevant to industry: THz systems are already used for non-invasive inspection in the pharmaceutical sector (e.g., quality control of tablet coatings46), for semiconductor fault detection, and in security screening to identify concealed threats. THz device development is also a priority for national institutions such as the National Physical Laboratory47. Portable, quantum-material-based THz emitters could be transformative for UK industries, enabling real-time imaging of defects in materials, enhanced food and pharmaceutical safety checks, next-generation wireless communications, and advanced national security capabilities.
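The quoted wavelength range follows directly from the frequency range via λ = c / f. The short sketch below reproduces the conversion; the function name is illustrative.

```python
# Convert the terahertz band edges quoted in the text to free-space wavelengths.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact SI value


def wavelength_mm(frequency_thz: float) -> float:
    """Return the free-space wavelength in millimetres for a frequency in THz."""
    frequency_hz = frequency_thz * 1e12
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1e3


for f_thz in (0.1, 10.0):
    print(f"{f_thz} THz corresponds to {wavelength_mm(f_thz):.3f} mm")
```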

Quantum secure communications: As cyber threats escalate, the UK faces growing vulnerabilities: businesses lose an average of £3.4 million per data breach48, the National Cyber Security Centre (NCSC) responds to over 400 incidents each year – nearly 100 of which are nationally significant49 – and cybercrime causes an estimated £30 billion in annual economic loss50. Moreover, future quantum computers could render current encryption obsolete – dubbed the “quantum apocalypse” – a risk so serious that NCSC has outlined a timeline for migrating to quantum-resistant encryption by 203551,52. Quantum materials enable sovereign quantum key distribution (QKD) systems – a fundamentally new defence that uses quantum mechanics to make any eavesdropping instantly detectable – identified by the UK Quantum Communications Hub as critical for securing critical national infrastructure53. Specifically, materials like QDs, photonic crystals, 2D materials, or rare-earth-doped solids enable compact, efficient entangled-photon sources, critical for QKD systems54,55,56,57. These could integrate into fibre-optic systems or even satellites58, ensuring secure data transfer for governments, banks, and individuals. The result is a communications infrastructure where security is fundamentally guaranteed by the laws of physics – not just mathematical algorithms – thanks to the unique properties of quantum materials52.

Clean energy and resilient infrastructure

Achieving ambitious energy and climate goals requires materials that can transport electricity and ions with minimal energy loss – and convert or harvest light and chemical energy far more efficiently than current technologies. Quantum materials can unlock those order‑of‑magnitude gains.

Energy storage, conversion, and catalysis. The energy sector could see a quantum leap with these materials59. For energy storage, 2D MXenes and perovskite-based quantum materials enable batteries with higher energy density and faster charging through enhanced ionic conductivity60,61 – critical for meeting the UK’s projected need for 23-27 GW of battery storage and 4-6 GW of long-duration energy storage by 203062. For energy conversion, quantum dots boost solar cell efficiency by capturing a broader spectrum of sunlight63,64, aligning with the UK’s ambition to deploy up to 47 GW of solar PV capacity as part of its clean power transition62. For sustainable catalysis, metal-organic frameworks and other designer quantum materials improve reaction selectivity and efficiency in key processes like hydrogen evolution and CO₂ reduction65,66,67. These breakthroughs address both the £44 billion cost burden from recent fossil fuel price volatility and the need for a resilient, low-carbon infrastructure68. With £40 billion per year of clean energy investment expected through 2030, quantum-material-enabled technologies could play a vital role in building an energy system that lowers emissions, supports energy independence, and powers a sustainable industrial future for the UK62.

Loss‑free transmission and superconducting devices: Superconductivity – the complete absence of electrical resistance and expulsion of magnetic fields – typically requires extreme cooling (often well below the boiling point of liquid nitrogen, -196°C), limiting its widespread use. Engineered layered quantum materials such as pnictides, cuprates, or nickelates hint at the possibility of higher transition temperatures69,70,71,72,73. Even incremental increases in transition temperature would yield substantial energy efficiency benefits and enhance sustainability by reducing cooling system requirements74. Room temperature superconductivity (RTS) would be a globally transformative breakthrough: it would revolutionise power and transport, enabling lossless electrical grids, frictionless magnetic levitation (e.g. high-speed trains), and ultra-fast superconducting electronics – fundamentally reshaping how we generate, transmit, and use energy. Such a breakthrough aligns directly with the UK’s net-zero 2050 strategy and energy security goals, as a superconducting grid would sharply cut carbon emissions and energy waste domestically. By leading the global race toward RTS, the UK could achieve a monumental national scientific milestone, as well as secure first-mover advantage in one of the most consequential technological frontiers of the century.

Need for AI and data-driven discovery

Identifying and optimising new quantum materials is a vast search problem. The space of elemental combinations, structures, and processing conditions is essentially infinite, and quantum phenomena are highly sensitive to subtle material parameters. Traditional trial-and-error experimentation cannot keep pace with this complexity – exhaustive exploration is infeasible because each added element or processing variable multiplies the number of possible experiments, so the total grows exponentially. Most quantum properties emerge from complex interactions that are not directly evident from crystal structures, which obscures structure-property prediction. Furthermore, sophisticated experiments (like single crystal growth, synchrotron X-ray, or high-resolution transmission electron microscopy) are costly and time-consuming, so each data point (each candidate material’s performance) represents a significant investment. These challenges threaten to impede innovation in quantum materials, as the “sparsity” of optimal materials and the complexity of their processing-structure-property relationships make serendipitous discovery increasingly unlikely.
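To give a feel for this growth, the sketch below counts candidate experiments under deliberately simple assumptions: a hypothetical pool of 60 usable elements, materials defined only by which elements they contain (stoichiometry and structure are ignored), and a fixed number of discrete levels per processing variable. The function name and all numbers are illustrative and are not drawn from any survey.

```python
from math import comb


def design_space_size(
    n_elements: int,
    elements_per_material: int,
    n_process_variables: int,
    levels_per_variable: int,
) -> int:
    """Count element combinations times processing-condition settings."""
    element_choices = comb(n_elements, elements_per_material)
    process_settings = levels_per_variable ** n_process_variables
    return element_choices * process_settings


# Hypothetical pool of 60 elements, 10 levels per processing variable.
for k in (2, 3, 4):
    for n_vars in (2, 3, 4):
        size = design_space_size(60, k, n_vars, 10)
        print(f"{k} elements, {n_vars} process variables: {size:,} experiments")
```

Even this coarse count moves from roughly a hundred thousand to several billion candidate experiments as elements and processing variables are added, which is the scaling argument made in the text.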

A data-centric, AI-driven approach offers a timely solution. By combining high-throughput experiments, computational modelling, and machine learning (ML), researchers can navigate the immense design space more efficiently. Instead of manually testing a handful of materials, automated synthesis and characterisation platforms can produce and screen hundreds of thousands of samples, guided by AI algorithms that in turn learn from each result. Crucially, keeping experimental validation “in the loop” ensures that ML models remain grounded in reality – predictions are promptly tested, and the new data points refine the model. This iterative cycle accelerates learning and helps correct model biases or errors, dramatically improving the ability to predict promising quantum materials75.
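The iterative cycle described above can be sketched in a few lines. This is a toy illustration rather than a proposed implementation: `run_experiment` is a hypothetical stand-in for synthesis plus measurement (here a made-up property that peaks at x = 0.7), the surrogate is a simple nearest-neighbour lookup, and the selection rule adds an exploration bonus for candidates far from anything already measured.

```python
from typing import Dict, List, Tuple


def run_experiment(x: float) -> float:
    """Hypothetical stand-in for a real measurement; peaks at x = 0.7."""
    return 1.0 - (x - 0.7) ** 2


def predict(x: float, measured: Dict[float, float]) -> Tuple[float, float]:
    """Nearest-neighbour surrogate: (predicted value, distance to nearest data)."""
    nearest = min(measured, key=lambda m: abs(m - x))
    return measured[nearest], abs(nearest - x)


def closed_loop(
    candidates: List[float], n_rounds: int, explore_weight: float = 1.0
) -> Dict[float, float]:
    """Alternate between model-guided selection and experimental validation."""
    measured = {candidates[0]: run_experiment(candidates[0])}  # seed experiment
    for _ in range(n_rounds):
        untested = [x for x in candidates if x not in measured]
        if not untested:
            break

        def score(x: float) -> float:
            value, distance = predict(x, measured)
            return value + explore_weight * distance  # exploit + explore

        next_x = max(untested, key=score)
        measured[next_x] = run_experiment(next_x)  # new data refines the model
    return measured


candidates = [i / 20 for i in range(21)]
results = closed_loop(candidates, n_rounds=8)
best_x = max(results, key=results.get)
print(f"Tested {len(results)} of {len(candidates)} candidates; best x = {best_x}")
```

In a real campaign the surrogate would be a trained ML model with calibrated uncertainty, but the structure is the same: every prediction that drives a choice is followed by a measurement that feeds back into the model.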

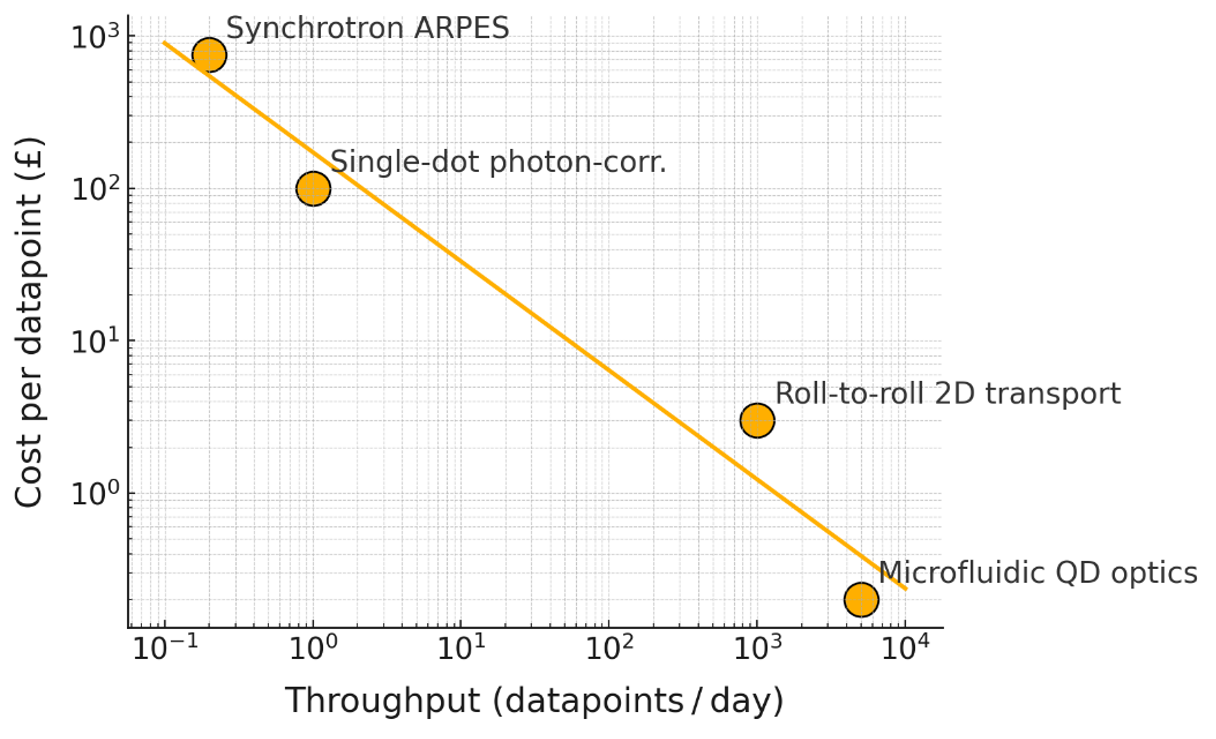

Likewise, the adoption of AI would also drive dramatic cost reductions per experiment. To realise large-scale discovery, the research community must achieve a 10×-100× reduction in cost per data point76. Achieving this goal demands rigorous benchmarks and experimental techniques that are far more efficient than today’s norms. Parallelised measurements, miniaturised devices, and standardised test protocols can drive costs down. With automation and digital workflows redesigning the modern lab, our analysis estimates that throughput improvements of two to three orders of magnitude are attainable, along with proportionate cost reductions per sample. By embracing these approaches, we can generate massive, diverse datasets that data-driven models require, without incurring prohibitive costs. Integration of AI with large-scale, low-cost experimental data generation is now indispensable – it will allow us to explore ideas that would be impossible via traditional means, ultimately unlocking quantum materials breakthroughs at an unprecedented rate.

Current state of AI-driven materials discovery

Artificial intelligence is already transforming biology, medicine, and chemistry. In protein folding, for instance, AlphaFold has revolutionised the prediction of complex 3D structures of proteins from amino acid sequences with unprecedented accuracy, slashing years off research timelines77. In drug discovery, AI models sift through vast chemical libraries to identify promising compounds, guiding experimental efforts toward viable candidates for diseases like cancer or Alzheimer’s. In chemistry, AI has accelerated the design of catalysts, such as those for sustainable fuel production, by predicting reaction pathways and optimising molecular structures for efficiency, often reducing the need for exhaustive experimental trials78,79. These successes depend on AI's capacity to extract meaningful patterns from large, high-quality, experimentally validated datasets.

In materials science, AI is beginning to show similar promise. Machine learning models can predict properties like conductivity or strength based on atomic structures, while generative algorithms propose novel material compositions for testing. High-throughput computational tools, paired with AI, have already sped up the screening of alloys and semiconductors. However, quantum materials research lags behind these advances. The field relies heavily on theoretical models and simulated datasets, such as those from density functional theory (DFT), rather than robust experimental data. While these computational approaches provide valuable insights, they frequently struggle to capture the full complexity of quantum phenomena, like superconductivity or topological behaviour, which emerge from collective many-body interactions that are challenging to model accurately. Simulated properties often differ substantially from measured material performance, and without experimental grounding AI predictions risk being speculative or overly generalised. This dependence on incomplete or idealised data limits our ability to discover and optimise quantum materials with the precision and speed seen in other domains.

Need for an experimental dataset

Recent successes outside quantum materials – polymer dielectrics, high-entropy alloys, battery electrolytes – show that when deep learning meets rich experimental data, materials discovery cycles compress from years to months80,81,82,83. To unlock AI’s full potential in quantum materials discovery, a large-scale, experimentally derived dataset is essential. Unlike theoretical or simulated data, experimental datasets capture the real-world complexity of material synthesis, processing, and performance – critical factors that directly determine quantum properties. AI models thrive on large, diverse, and well-annotated data to generate accurate predictions and design new materials. Only experimental results provide the necessary ground truth to train these models, refine their outputs, and ensure their predictions are physically meaningful. By integrating real experimental measurements – from X-ray diffraction, spectroscopy, and electron microscopy to transport and magnetometry – AI can learn the nuanced relationships between structure, synthesis conditions, and quantum behaviour. This data-centric approach is the key to navigating the vast, intricate design space of quantum materials, where theoretical models often fail to capture emergent properties.

Yet the datasets available today fall far short of this vision. Most are computationally generated, lacking the scale, standardisation, and real-world relevance required for transformative progress. While small, fragmented experimental datasets exist, they are tailored to specific studies, making them unsuitable for broad AI applications84,85. They lack uniformity in measurement techniques or metadata, hindering integration and comparison. Moreover, these datasets do not capture the necessary range of variables – precise growth/synthesis conditions, concentration of defects, or doping levels – that influence and often dominate quantum properties. This sparsity and inconsistency of datasets severely limits AI’s effectiveness, leaving current models incomplete and unreliable. Existing platforms such as the NREL HTEM85 database are restricted not only by their narrow property coverage and identification solely by stoichiometry, but also, critically, by their lack of detailed structural context. Without explicit structure-property links, models trained on these datasets cannot reliably generalise beyond the measured compositions. Existing datasets also rarely report negative results, significantly restricting AI’s ability to employ active learning methods, which rely on both successful and unsuccessful examples to refine predictions. While semiconductor and deep-tech companies often possess internal experimental datasets, these are typically proprietary, small-scale, poorly designed, and narrowly application-specific, and they lack standardisation and clear criteria for data collection and storage – making them currently unsuitable for broader scientific AI training.

Approach to the experimental dataset

AlphaFold77 succeeded because the Protein Data Bank already covered most of the ‘fold-space’86,87. Materials science lacks an analogous experimental landscape that comprehensively links crystal structures to properties. We call for an initiative to create this landscape – starting with the tractable world of 2D crystals and quantum dots. Like protein fold-space, the space of inorganic compounds is constrained: many adopt one of a limited set of structure types (perovskite, spinel, rocksalt, etc.)88. Two-dimensional materials shrink the materials space further: only 80 layer groups versus 230 bulk space groups89. Exploring this smaller landscape experimentally is practical: computational surveys list thousands of 2D candidates90,91,92, but fewer than 100 have been verified experimentally so far, so this space offers “low-hanging fruit” for quantum materials discovery.

Why a unified approach? 2D materials and quantum dots represent complementary extremes of quantum confinement. Treating them in a single AI‑driven loop offers three key advantages: 1. Transferable insights from QDs to 2D materials, and vice versa; 2. Coverage of a wide materials spectrum extending from 2D to 2.5D (multilayer heterostructures), to 1D (quantum channels) and 0D (quantum dots, qubits); 3. Alignment with the UK's leading strength in both 2D materials and QD R&D. The expected result is a discovery engine that delivers more breakthroughs, faster, with the same experimental budget.

To move toward a “Nobel-Turing moment”93 in quantum materials discovery, the development of a large-scale experimental dataset must be guided by two core principles: alignment with advanced AI training objectives and practical experimental and economic feasibility. This dual focus ensures that the dataset is not only scientifically meaningful but also scalable and implementable within real-world constraints.

Alignment with AI training goals

The dataset must be purpose-built to train state-of-the-art AI models. This means prioritising data that directly support the prediction and design of key quantum material properties, such as critical temperature in superconductors or dielectric function in photonic materials. Careful choice of parameters will allow AI to navigate complex, high-dimensional materials spaces and generate actionable insights.

The dataset must offer rich and diverse experimental records that capture the relationships between material composition, structure, processing conditions, and observed quantum behaviour. Priority should be given to experiments that yield high-value data points for learning, exploration, and generalisation. Additionally, the inclusion of both positive and negative results is critical for improving model robustness and enabling active learning strategies, where AI models guide data collection for maximum impact.
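One way to picture such a record is as a small structured object that ties composition, structure, processing, and measurement together and carries an explicit flag for negative results. The sketch below is purely illustrative: the class name, field names, and the example values are hypothetical placeholders, not a proposed ontology or real measurements.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, Optional


@dataclass
class ExperimentRecord:
    """Hypothetical minimal record linking processing, structure and property."""

    sample_id: str
    composition: Dict[str, float]  # element symbol -> stoichiometric amount
    structure: str                 # free-text structural description
    processing: Dict[str, float]   # named processing parameters
    technique: str                 # measurement technique
    property_name: str
    value: Optional[float]         # None when nothing could be measured
    unit: str
    success: bool                  # False marks a negative result
    notes: str = ""


def to_json(record: ExperimentRecord) -> str:
    """Serialise a record to JSON with a stable key order."""
    return json.dumps(asdict(record), sort_keys=True)


# Invented example of a negative result, kept rather than discarded.
negative_result = ExperimentRecord(
    sample_id="demo-001",
    composition={"Mo": 1.0, "S": 2.0},
    structure="monolayer",
    processing={"growth_temperature_C": 750.0},
    technique="photoluminescence",
    property_name="pl_peak_energy",
    value=None,
    unit="eV",
    success=False,
    notes="no emission detected",
)

restored = ExperimentRecord(**json.loads(to_json(negative_result)))
print(to_json(negative_result))
```

Keeping failed attempts in the same format as successes is what lets an active learning loop use them: the model sees where in processing space a property did not appear, not only where it did.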

Broadening the scope of materials in the dataset is also key to robust AI training. Exposing AI models to multiple quantum material families (2D crystals and 0D nanocrystals) will encourage cross-domain generalisation. An ML model trained on such a wide spectrum, from 2D-bound electron systems to 0D quantum-confined states, can learn more universal representations of quantum phenomena, making its predictions more reliable across new, unseen materials. In practice, combining disparate but complementary material classes expands the range of properties and patterns the AI can learn. For instance, a model ingesting both the electronic transport data of 2D materials and the photoluminescence spectra of semiconductor QDs would develop a richer understanding of how quantum confinement and dimensionality affect material behaviour. This approach directly supports the goal of producing generalisable AI models that aren’t limited to one niche of quantum materials but can transfer insights across domains. It also helps bridge data modalities – linking, say, an optical response dataset (common for QDs) with an electronic properties dataset (common for 2D materials) – which can improve AI’s ability to correlate different types of measurements. A dataset spanning both 2D materials and QDs will better align with advanced AI training objectives by providing the breadth and variety needed for robust, transferrable learning.

Experimental and economic feasibility

Experimental feasibility is a non-negotiable prerequisite. Any proposed experiment must be practical and achievable with current or near-future technology and resources. This involves assessing the availability of equipment, the complexity of procedures, and the time required for setup and execution. Feasibility also means choosing experiments that can be replicated across most labs, avoiding those requiring exotic conditions or rare resources. This foundational step filters out impractical methods, ensuring that only viable options proceed to further evaluation.

Once alignment and feasibility are established, maximising throughput becomes critical. Throughput refers to the number of data points generated per unit time, essential for building a large-scale dataset efficiently. High throughput can be achieved through automation, such as automated synthesis platforms and parallelised measurement techniques, which process multiple samples simultaneously. Prioritising throughput ensures that the dataset grows rapidly, enabling AI models to learn from a large, diverse set of experimental results, crucial for handling the complexity of quantum materials. For instance, implementing high-throughput characterisation systems can screen hundreds of thousands of samples, significantly speeding up data collection – throughput improvements of one to three orders of magnitude are attainable, which directly reduces the cost per data point76.

Quantum dot experiments exemplify these feasibility and throughput principles. QDs can be synthesised in solution at bench scale, using standard chemical methods that are readily automated. In fact, the bottom-up synthesis of QDs is highly adaptable to microfluidic and parallel approaches, meaning hundreds of reactions can be run and monitored in parallel with minimal human intervention94. Automated, closed-loop setups can produce and characterise libraries of QD samples at speeds unattainable by conventional solid-state materials growth95,96. Characterisation techniques for QDs (photoluminescence, absorption spectra, quantum yield measurements) are likewise fast and can be multiplexed – for example, an optical scanner can record emission spectra from hundreds of quantum dots in minutes. Crucially, these solution-based processes do not require expensive or specialised infrastructure like MBE chambers or synchrotrons; they run at atmospheric pressure and relatively low temperatures. This drastically lowers per-sample cost. Materials like perovskite QDs can even be deposited by simple methods such as spin-coating or spraying, eliminating the need for slow, costly single-crystal growth64.

Further minimising the cost per data point is vital for the project's economic viability, especially given the scale required. Cost per data point is calculated as the total cost (including setup, operation, and resource use) divided by the number of data points collected. Strategies to reduce it include using standardised protocols to ensure consistency and efficiency, leveraging economies of scale, and reusing resources. For example, miniaturising devices and parallelising measurements can lower operational costs, while industry partnerships and government funding can offset initial investments. QDs illustrate the point: hundreds of QD compositions or core-shell structures can be synthesised and tested in the time it might take to grow and characterise a single complex oxide film. The cost per data point drops further with techniques such as combinatorial QD synthesis on microchips or pipetting robots that prepare many samples in parallel. This means our dataset can expand rapidly without prohibitive cost. Hence, QDs offer a feasible, high-throughput experimental pipeline: they can be made and measured at scale with relatively standard lab resources and integrated readily into automated workflows – reinforcing the economic viability of the overall project.
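The definition of cost per data point given above can be made concrete with a short calculation. The sketch below is illustrative only: the function name and all monetary figures and datapoint counts are assumptions chosen to show the arithmetic, not project estimates.

```python
def cost_per_datapoint(setup_cost, operating_cost, resource_cost, n_datapoints):
    """Total cost (setup + operation + resources) divided by datapoints collected."""
    if n_datapoints <= 0:
        raise ValueError("n_datapoints must be positive")
    return (setup_cost + operating_cost + resource_cost) / n_datapoints


# Hypothetical figures in pounds (assumptions for illustration only).
# A manual workflow: low setup cost, few datapoints.
manual = cost_per_datapoint(50_000, 40_000, 10_000, n_datapoints=1_000)

# An automated workflow: higher setup cost, far more datapoints.
automated = cost_per_datapoint(200_000, 40_000, 10_000, n_datapoints=100_000)

print(f"manual:    £{manual:.2f} per datapoint")
print(f"automated: £{automated:.2f} per datapoint")
```

With these assumed inputs, the larger setup cost of automation is outweighed by the much larger number of datapoints it is spread across.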

Choosing high-leverage experiments: Identify techniques that efficiently target features important to the AI models - like ARPES for band structure or magnetotransport for carrier dynamics - and prioritise them by how much useful information they yield per pound spent. Illustrative examples:

2D films. Wafer-scale or roll-to-roll spatial atomic layer deposition (SALD) with off-line Raman/PL spectral mapping or multiplexed cryogenic transport would offer over 1,000 datapoints per day at low cost. Synchrotron ARPES, in contrast, returns only one band structure a week at significantly higher cost. While both target composition-dependent electronic properties, the SALD route supplies two to three orders of magnitude more cost‑normalised information.

Quantum‑dot optics. Arrays of microfluidic reactors vary precursor ratios on the fly and stream droplets through an in-line spectrometer, recording peak wavelength and quantum yield for thousands of formulations per day at a fraction of a pound each. Cryogenic photon‑correlation spectroscopy on individual dots offers richer exciton‑lifetime data, but tops out at ~1–2 dots per day and ~£100 per measurement.

Guiding principle: Feed the model with low-cost, high-throughput data until its uncertainty plateaus; then bring in the slower, expensive probes only for sparse deep investigation. Our estimates show that the cost per datapoint scales as $\text{throughput}^{-0.72}$: a ten-fold rise in daily datapoints cuts cost roughly five-fold. This predicted scaling matches the best self-driving labs reported in recent reviews97,98,99. By prioritising such high-throughput, automatable experiments (in QDs and 2D materials alike), we can ensure that large volumes of quality data can be generated within a pragmatic budget. Throughput improvements on the order of $10^{2}$–$10^{3}$ translate directly into cost-per-data-point reductions, making the creation of a massive experimental quantum materials dataset economically feasible. Meanwhile, any trade-offs, e.g. an ultra-high-value but low-throughput measurement, can be balanced by the sheer volume of faster, cheaper data. The approach is inherently multi-objective: we can strategically blend experiment types to maximise learning while respecting real-world constraints on time and funding.
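The scaling relation quoted above can be checked with a few lines of arithmetic. The sketch below assumes a pure power law with the exponent of −0.72 given in the text; the function name and the choice of throughput gains are illustrative.

```python
SCALING_EXPONENT = -0.72  # exponent quoted in the text


def relative_cost(throughput_gain, exponent=SCALING_EXPONENT):
    """Cost per datapoint relative to baseline when throughput is multiplied by throughput_gain."""
    if throughput_gain <= 0:
        raise ValueError("throughput_gain must be positive")
    return throughput_gain ** exponent


for gain in (10, 100, 1000):
    reduction = 1.0 / relative_cost(gain)
    print(f"{gain:>5}x throughput -> cost per datapoint falls about {reduction:.1f}-fold")
```

For a ten-fold throughput gain this gives a reduction of about 5.2-fold, consistent with the "five-fold" figure. If the same exponent held over a wider range, gains of 100× and 1,000× would correspond to reductions of roughly 28-fold and 145-fold.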

The UK already possesses world-class hardware for quantum‑materials research: the Diamond Light Source synchrotron, the National Physical Laboratory (NPL) quantum metrology suites, and cleanroom fabs at Manchester, Cambridge, UCL, and elsewhere. What it lacks is a single, mission‑driven team that can weave those assets into a high‑throughput, AI‑ready discovery engine. To drive this initiative, a purpose‑built research institution or a Focused Research Organisation100 (FRO) would bridge the existing gap, tasked exclusively with producing standardised, industrial-scale experimental datasets of national strategic importance.

This FRO-style initiative would act as a compact “engine room” inside the national research estate. It would purchase only the components the wider system does not already provide – modular synthesis and characterisation racks, real‑time data servers, and the AI workflow that steers them – while leasing high‑capex capabilities from Diamond, NPL, or university labs through service agreements. Measurements from external instruments would stream into the cloud via secure channels and be annotated automatically according to a shared ontology, ensuring that every record is machine‑readable.

A multidisciplinary team of engineers, chemists, physicists, and data scientists would work toward milestones set around cost-per-datapoint, throughput, and reproducibility targets; facility scientists employed at their home institutions would serve as embedded consultants, guaranteeing best-in-class calibration without duplicating billion-pound infrastructure. Because every proposed experiment must clear gates on feasibility, throughput, and cost, each datapoint generated is cheap, repeatable, and useful for AI. The governance model echoes successful UK precedents such as the Rosalind Franklin Institute and the Battery Industrialisation Centre, combining lean management with national credibility.

A pilot centred on thin-film deposition of 2D materials and their heterostructures, together with solution‑phase QD synthesis, would demonstrate the feasibility of dataset generation. Within three to five years, the initiative could raise national datapoint generation by three orders of magnitude, harnessing UK facilities yet moving with start‑up agility. Like a Formula 1 design shop that rents wind‑tunnel time rather than building its own, this national initiative would turn dispersed excellence into a coordinated, high‑throughput discovery machine, delivering a public, industrial‑grade dataset on the timescale demanded by the global race for AI‑accelerated quantum‑materials discovery.

The discovery platform forms a closed-loop AI cycle: a self-supervised model-based reinforcement learning engine and hierarchical generative planner iteratively suggest experiments, and the most informative measurements provide feedback to refine the model. By injecting feasibility, cost, and facility availability constraints at the decision points shown, the loop remains grounded in real-world laboratory throughput and budget limits.
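One decision point in such a loop, where feasibility, cost, and facility availability constrain the choice of experiments, can be sketched in a few lines. The example below is a deliberately simplified stand-in: it uses a greedy "uncertainty per pound" heuristic rather than the reinforcement learning engine and generative planner described above, and every candidate name, uncertainty score, and cost is a hypothetical value chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    uncertainty: float  # model's current uncertainty about the outcome (arbitrary units)
    cost: float         # estimated cost in pounds
    feasible: bool      # passes the feasibility / facility-availability gate


def plan_batch(candidates, budget):
    """Greedily pick feasible candidates by uncertainty per pound until the budget is used."""
    ranked = sorted(
        (c for c in candidates if c.feasible and c.cost > 0),
        key=lambda c: c.uncertainty / c.cost,
        reverse=True,
    )
    batch, spent = [], 0.0
    for c in ranked:
        if spent + c.cost <= budget:
            batch.append(c)
            spent += c.cost
    return batch, spent


# Hypothetical candidates (all numbers are assumptions).
candidates = [
    Candidate("QD in-line PL screen", uncertainty=0.4, cost=1.0, feasible=True),
    Candidate("2D film Raman map", uncertainty=0.6, cost=5.0, feasible=True),
    Candidate("Single-dot photon correlation", uncertainty=0.9, cost=100.0, feasible=True),
    Candidate("Synchrotron ARPES", uncertainty=0.95, cost=5000.0, feasible=False),
]

batch, spent = plan_batch(candidates, budget=50.0)
print([c.name for c in batch], spent)
```

In a full loop, the measurements returned from the selected batch would update the model's uncertainties before the next planning round.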

Standardisation of the dataset

AI can only learn effectively if every experiment speaks the same “data language”. Whether it is a thin‑film ALD growth log, sheet resistivity, or QD emission spectrum, each record must follow one coherent syntax. The first task, therefore, is to impose a single ontology – a formal dictionary of all the concepts that matter in quantum materials research and the relationships that link them. An ontology plays the role that grammar plays in language: it tells both humans and machines exactly what a term such as “carrier density” or “thermal budget” means, what units it must carry, and how it connects to other ideas such as “mobility” or “substrate temperature”. An ontology creates a shared vocabulary and schema, enabling consistent data annotation, streamlined querying, and better interoperability across research teams101,102,103,104. With this foundation in place, datasets become accessible, usable, and impactful, allowing AI models to deliver meaningful insights and drive innovation in quantum materials.

With an ontology in place, every data entry – an XRD pattern of a 2D material or a photoluminescence spectrum of a QD – is tagged with standardised descriptors. This consistency enables direct comparisons of properties across thousands of samples and straightforward merging of different datasets. It also aids automated data cleaning and anomaly detection: the ontology defines expected relations, against which outliers can be flagged. For example, if a QD emission wavelength is recorded but its core size is missing, ontology-based rules would highlight that inconsistency for resolution. Over time, as the dataset grows, this standardisation will support knowledge graph generation, where connections across experiments (and across QD and 2D domains) are mapped and queried. It transforms raw data into structured knowledge that AI and human researchers can navigate.
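The kind of ontology-based rule described above can be illustrated with a minimal sketch. The term names, units, and dependency rule below are hypothetical placeholders rather than a proposed schema; a real ontology would be far richer and would probably be expressed in a dedicated ontology language rather than a Python dictionary.

```python
# Hypothetical mini-ontology: each term has a required unit and a list of
# other terms that must accompany it in the same record.
ONTOLOGY = {
    "emission_wavelength": {"unit": "nm", "requires": ["core_size"]},
    "core_size": {"unit": "nm", "requires": []},
    "carrier_density": {"unit": "cm^-2", "requires": []},
}


def validate(record):
    """Return a list of issues for one record, where record maps term -> (value, unit)."""
    issues = []
    for term, (value, unit) in record.items():
        spec = ONTOLOGY.get(term)
        if spec is None:
            issues.append(f"unknown term: {term}")
            continue
        if unit != spec["unit"]:
            issues.append(f"{term}: expected unit {spec['unit']}, got {unit}")
        for dependency in spec["requires"]:
            if dependency not in record:
                issues.append(f"{term}: missing required field {dependency}")
    return issues


# A QD record with an emission wavelength but no core size is flagged.
incomplete = {"emission_wavelength": (620.0, "nm")}
complete = {"emission_wavelength": (620.0, "nm"), "core_size": (4.2, "nm")}

print(validate(incomplete))
print(validate(complete))
```

Because the rules live in the ontology rather than in each ingestion script, the same check applies unchanged to every new data source.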

A domain-specific ontology is a foundational layer for structuring data, allowing the development of a robust, AI-ready experimental dataset for quantum materials. This approach ensures that key components – such as material composition, synthesis conditions, structural parameters, and electronic or magnetic properties – are consistently defined and interoperable across research efforts. By aligning dataset design with ontological standards, we enable traceability, reduce ambiguity, and support integration with external knowledge systems. Importantly, this strategy balances the technical rigour needed for ML with the practical realities of experimental throughput and cost, allowing for scalable data collection and annotation without overburdening research workflows. In doing so, it lays the groundwork for training AI models that are not only predictive, but also interpretable and grounded in physical insight.

There are three major benefits of using an ontology for dataset standardisation. First, the labour required to curate the database grows sub‑linearly: once the rules are in place, adding a hundred thousand new points is little more effort than adding a thousand. Second, a federated “UK materials graph” becomes possible, because other research centres, industries, and universities can append their results automatically to the dataset. Third, the AI model receives clean, richly annotated inputs, raising both its predictive accuracy and interpretability.

Key prediction and design tasks enabled by the dataset

The proposed dataset equips AI with two linked capabilities that have so far eluded purely computational approaches. First, it supports reliable prediction of emergent properties – for example, superconducting transition temperature, topological band order, or exciton lifetime – by training models on measured, not simulated, behaviour. Second, it enables inverse design, in which an algorithm works backward from a target property to suggest the material compositions and processing conditions most likely to achieve it. Grounding both tasks in high-quality experimental data lets the model learn many-body correlations that ab initio calculations miss, then exploit that insight to explore the vast, quantum‑dominated design space with speed and confidence. Together, these twin capabilities attack the field’s central bottleneck: finding viable candidates in a search space too large for trial‑and‑error and too complex for theory alone.

Prediction of quantum properties

Training on a large, well‑labelled body of experiments will let AI models predict quantum properties that have so far eluded first‑principles calculations. From routine descriptors – composition, substrate, growth temperature, and baseline structural data – the models will be able to infer properties including superconducting transition temperature, topological characteristics, strongly correlated electronic phases, quantum transport behaviour, optical responses in quantum dots, and novel phenomena in 2D–QD hybrids. Accurate predictions of these properties are essential to developing transformative quantum technologies, from superconductors and quantum computers to next-generation sensors and ultra-efficient AI hardware.

Materials design and optimisation

Once AI models trained on our dataset can accurately predict quantum properties, we can reverse the workflow: starting from a desired property, the model will suggest optimal synthesis protocols to achieve it. This inverse design mode turns the search for new materials into an optimisation problem that the AI can tackle directly, rapidly guiding the development of complex materials such as tailored van der Waals heterostructures, advanced quantum-dot emitters, robust quantum sensors, and economically viable quantum hardware. Inverse design replaces slow, trial-and-error experimentation with targeted, high-impact studies, accelerating innovation toward market-ready quantum technologies.
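The reversal from prediction to design can be illustrated with a toy example in which a forward model is searched for the inputs that best match a target property. The forward model below is a made-up linear placeholder, not a physical model or a trained network, and the parameter names, grid values, and target are assumptions; a practical system would use a trained surrogate and a more capable optimiser than exhaustive grid search.

```python
from itertools import product


def toy_forward_model(core_size_nm, growth_temp_c):
    """Placeholder surrogate returning a notional emission wavelength in nm.

    This is NOT a physical model; it only stands in for a trained predictor.
    """
    return 400.0 + 50.0 * core_size_nm + 0.1 * (growth_temp_c - 150.0)


def inverse_design(target, sizes, temps, model=toy_forward_model):
    """Return the (size, temperature) pair whose prediction is closest to the target."""
    return min(product(sizes, temps), key=lambda params: abs(model(*params) - target))


# Hypothetical search grid and target (assumptions for illustration).
best = inverse_design(
    target=610.0,
    sizes=[2.0, 3.0, 4.0, 5.0],
    temps=[150.0, 200.0, 250.0],
)
print(best)
```

The same pattern generalises: the better the forward model reflects measured behaviour, the more trustworthy the suggested synthesis conditions become.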

Required data types and collection methodology

To support these AI tasks, the dataset must integrate complementary data categories while prioritising high-throughput, cost-effective experimental approaches. This requires strategic choices on which measurements provide the highest information density per unit cost:

Structural and chemical characterisation data will be collected primarily through automated, high-throughput techniques – such as grazing-incidence X-ray diffraction maps, Raman/photoluminescence maps, and spectroscopic ellipsometry – that can in principle process $10^{3}$–$10^{4}$ samples daily. Intermediate-throughput techniques include scanning electron microscopy with energy-dispersive spectroscopy and scanning probe microscopy. Other specialised techniques, such as neutron scattering and high-resolution transmission electron microscopy, provide valuable insights but will be used only for representative samples, optimising the cost-information balance.

Electronic, magnetic, and optical measurements will employ scalable, parallelised screening approaches. For instance, multiplexed transport measurements and optical spectroscopy can rapidly assess thousands of materials, while resource-intensive techniques like ARPES or SQUID magnetometry will be strategically deployed only for promising candidates identified through initial screening.

Synthesis and processing metadata will be captured through automated synthesis platforms, ensuring comprehensive documentation of processing-structure-property relationships without increasing experimental overhead. Ontology and standardised reporting formats will facilitate automated extraction and integration.

Measurement context parameters will be recorded using consistent protocols across all experiments, enabling meaningful cross-comparison without requiring additional measurements.

This tiered approach – combining broad, high-throughput screening with selective in-depth characterisation – aligns with the emphasis on dramatically reducing the cost per data point. By implementing parallelised measurements, standardised protocols, and automation, we can achieve the 100–1000× cost reduction identified as necessary for large-scale discovery while maintaining data quality and comprehensiveness.

Data collection will follow the experimental feasibility and economic viability principles outlined earlier, with priority given to techniques that balance throughput, cost-effectiveness, and information density. Collection pathways will include:

Strategic high-throughput experiments, designed specifically for this initiative, prioritising methods with favourable throughput-to-cost ratios. These would supply the majority of the datapoints.

Systematic extraction from published literature using advanced text mining and data extraction tools, combining manual validation by domain experts with the development of agentic AI pathways to accelerate the process. This allows for early training of active learning models even before the high-throughput experimental pipeline is fully deployed.

Integration with existing materials databases (e.g., Materials Project105, ICSD106) through standardised APIs and data transformation pipelines. This allows integration into recognised computational and structural databases.

Partnerships with industrial and academic laboratories to incorporate previously unpublished experimental results, including valuable negative outcomes that rarely appear in the literature. This also benefits the translation of the outcomes to industry.

The ontological framework described in the standardisation section will unify these diverse data sources, ensuring seamless integration, traceability, and interoperability, creating a dataset that is both comprehensive and machine-actionable.

By aligning our data collection strategy with both the experimental feasibility considerations and the specific requirements of advanced AI models, we ensure the resulting dataset will drive meaningful progress in quantum materials discovery, directly addressing the limitations outlined in earlier sections.

Closing the validation loop – At regular milestones (e.g. every 10,000 new datapoints) we will freeze the dataset and publicly run a blinded benchmark: participants will be invited to train Model A on the combined experimental + simulated corpus and Model B on simulations alone, and both will then be scored against a held-out set of fresh, unpublished measurements. Accuracy uplift, top-k discovery yield, and cost-per-successful-candidate will be reported on a public leaderboard. This rolling audit quantifies the dataset’s value while the project is live, long before the final tranche of data is collected, and lets the community iterate on both models and measurement strategies in real time.
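Two of the leaderboard metrics named above can be sketched as follows. The definitions here are assumptions made for illustration: accuracy uplift is taken as the reduction in mean absolute error of Model A relative to Model B, and top-k discovery yield as the fraction of a model's k highest-ranked candidates whose measured value meets a threshold. All numbers are invented solely to exercise the functions.

```python
def mean_absolute_error(predicted, measured):
    """Average absolute difference between predictions and held-out measurements."""
    return sum(abs(p - m) for p, m in zip(predicted, measured)) / len(measured)


def top_k_yield(predicted, measured, k, threshold):
    """Fraction of the k highest-predicted candidates whose measured value >= threshold."""
    top = sorted(range(len(predicted)), key=lambda i: predicted[i], reverse=True)[:k]
    return sum(measured[i] >= threshold for i in top) / k


# Invented held-out measurements and model predictions (illustrative only).
measured = [10.0, 35.0, 5.0, 40.0, 20.0]
model_a = [12.0, 33.0, 6.0, 38.0, 22.0]   # trained on experiment + simulation
model_b = [30.0, 20.0, 25.0, 15.0, 10.0]  # trained on simulation alone

uplift = mean_absolute_error(model_b, measured) - mean_absolute_error(model_a, measured)
yield_a = top_k_yield(model_a, measured, k=2, threshold=30.0)
yield_b = top_k_yield(model_b, measured, k=2, threshold=30.0)

print(f"accuracy uplift (MAE reduction): {uplift:.1f}")
print(f"top-2 yield: model A = {yield_a:.1f}, model B = {yield_b:.1f}")
```

Other error measures or ranking metrics could be substituted without changing the structure of the audit.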

Urgency and transformational impact

A standardised, ontology-based experimental dataset is the missing link between today’s quantum materials experiments and tomorrow’s breakthrough technologies. Anchoring AI models in real measurements, rather than idealised simulations, will shrink discovery cycles from years to days and open routes that conventional theory cannot chart. Immediate targets include raising superconducting transition temperatures toward ambient conditions, tailoring topological phases for fault-tolerant qubits and energy-preserving neuromorphic chips, and engineering quantum-enabled catalysts for green hydrogen, CO₂ reduction, and low-pressure ammonia synthesis. Each goal depends on navigating a design space that is too large for trial-and-error yet tractable for AI trained on a coherent, richly annotated corpus.

The timing is critical. Deep-learning architectures now handle structured scientific data; automated synthesis and screening platforms reach the required throughput; and the UK already owns world-class hardware – Diamond Light Source, NPL, the Royce Institute hubs – and a fast-growing AI sector. What is still missing is the grammar that binds disparate measurements into one machine-readable language, and the dedicated team that produces fresh experimental data at industrial cadence. Establishing those pieces now lets the UK write the standards others will follow; waiting risks importing someone else’s framework and, with it, reliance on their intellectual property.

Benefits to the UK

Investing in this dataset positions the UK as the global leader in AI-driven materials discovery, perfectly aligned with the AI Opportunities Action Plan and the National Quantum Technologies Programme. Rapid, cost-effective identification and validation of high-performance compounds will accelerate R&D in electronics, aerospace, clean energy, and pharmaceuticals, fuelling start-ups and high-skill jobs. New materials that are lighter, more efficient, and longer-lasting will advance net-zero goals – from better batteries and hydrogen catalysts to low-loss power hardware.

Most importantly, the dataset becomes a compounding national asset. Each additional experiment – whether from this initiative, a university partner, or an industrial collaborator – slots into the shared ontology and boosts the predictive power of the AI models. As machine-learning methods evolve, historic entries are re-mined for fresh insights at negligible cost. The result is a self-reinforcing engine for scientific discovery and industrial innovation that keeps the UK at the forefront of the global knowledge economy.

Challenges and solutions

Creating a national, AI-ready quantum materials dataset is a five-dimensional puzzle – data quality, cost, community buy-in, technical skills, and governance all have to click. Our solution is a quantum discovery initiative that opens with two high-leverage platforms – 2D materials and quantum dots (QDs). Both can be produced and measured at scale, and together they span the full range of quantum confinement physics107. The initiative will quickly generate proof-of-concept data, refine workflows before larger investment, and supply the sustained coordination needed to feed curated experiments straight into AI models. Below, we summarise key challenges in quantum materials discovery and outline how the proposed initiative addresses them.

By converting each risk into a quantifiable KPI and wiring those KPIs into our initiative’s charter, the programme turns what could be an open-ended research effort into an industrial-grade delivery engine for the UK.

Call to action

We are at a transformative moment for science and technology, and the UK stands at a strategic crossroads. With global momentum building around AI and quantum technologies, the ability to lead will depend not only on talent and ambition, but on data infrastructure that turns scientific insight into machine-actionable knowledge to fuel quantum technologies. The time to act is now – before other nations solidify their dominance in this field.

We urge the UK Government to launch a national effort to build an experimental dataset for quantum materials, starting with a tightly scoped pilot on 2D materials and quantum dots. This initiative will demonstrate feasibility, deliver early wins in materials discovery, and establish the foundational data architecture for future AI breakthroughs.

By acting decisively, the UK can seize global leadership in AI-powered materials science. This initiative will catalyse innovation across quantum and clean energy sectors, create a long-term national asset that supports national priorities in sustainability and economic resilience, and reinforce the UK’s technological sovereignty.

Acknowledgements

The author would like to thank Thomas Kalil, Thomas Westgarth, Sergei V. Kalinin, Anupam Bhattacharya, Maksym Plakhotnyuk, Andrey Ustyuzhanin, and Qian Yang for insightful and inspiring discussions.

References

Goyal, R. K., Maharaj, S., Kumar, P. & Chandrasekhar, M. Exploring quantum materials and applications: a review. Journal of Materials Science: Materials in Engineering 20, 1-38 (2025). https://doi.org:10.1186/s40712-024-00202-7

Du, Y., Chou, S. & Li, R. W. Developing Advanced Quantum Materials is Key to Promoting Science and Technology. Adv Sci 11, e2407326 (2024). https://doi.org:10.1002/advs.202407326

Garcia de Arquer, F. P. et al. Semiconductor quantum dots: Technological progress and future challenges. Science 373 (2021). https://doi.org:10.1126/science.aaz8541

Future directions for materials for quantum technologies report - Materials for Quantum Network, https://m4qn.org/news/future-directions-report (2024).

Ensuring that the UK can capture the benefits of quantum computing. https://www.oxfordeconomics.com/resource/ensuring-that-the-uk-can-capture-the-benefits-of-quantum-computing/ (2025).

Garreis, R. et al. Long-lived valley states in bilayer graphene quantum dots. Nature Phys. 20, 428-434 (2024). https://doi.org:10.1038/s41567-023-02334-7

Denisov, A. O. et al. Spin-valley protected Kramers pair in bilayer graphene. Nat Nanotechnol, 1-6 (2025). https://doi.org:10.1038/s41565-025-01858-8

Huang, J. Y. et al. High-fidelity spin qubit operation and algorithmic initialization above 1 K. Nature 627, 772-777 (2024). https://doi.org:10.1038/s41586-024-07160-2

Neyens, S. et al. Probing single electrons across 300-mm spin qubit wafers. Nature 629, 80-85 (2024). https://doi.org:10.1038/s41586-024-07275-6

Lin, A., Doty, M. F. & Bryant, G. W. Incorporation of random alloy GaBixAs1−x barriers in InAs quantum dot molecules: Alloy strain, orbital effects, and enhanced tunneling. Phys. Rev. B 109 (2024). https://doi.org:10.1103/PhysRevB.109.165303

Lutchyn, R. M. et al. Majorana zero modes in superconductor–semiconductor heterostructures. Nature Reviews Materials 3, 52 (2018). https://doi.org:10.1038/s41578-018-0003-1

Liu, Y. et al. Cryogenic in-memory computing using magnetic topological insulators. Nat Mater, 1-6 (2025). https://doi.org:10.1038/s41563-024-02088-4

Assi, D. S. et al. Quantum Topological Neuristors for Advanced Neuromorphic Intelligent Systems. Adv Sci (Weinh) 10, e2300791 (2023). https://doi.org:10.1002/advs.202300791

Zhou, B. T., Pathak, V. & Franz, M. Quantum-Geometric Origin of Out-of-Plane Stacking Ferroelectricity. Phys Rev Lett 132, 196801 (2024). https://doi.org:10.1103/PhysRevLett.132.196801

Chen, S. et al. Wafer-scale integration of two-dimensional materials in high-density memristive crossbar arrays for artificial neural networks. Nature Electronics 3, 638-645 (2020). https://doi.org:10.1038/s41928-020-00473-w

Nirmal, K. A., Kumbhar, D. D., Kesavan, A. V., Dongale, T. D. & Kim, T. G. Advancements in 2D layered material memristors: unleashing their potential beyond memory. npj 2D Materials and Applications 8, 1-27 (2024). https://doi.org:10.1038/s41699-024-00522-4

Lv, Z. et al. Semiconductor Quantum Dots for Memories and Neuromorphic Computing Systems. Chem Rev 120, 3941-4006 (2020). https://doi.org:10.1021/acs.chemrev.9b00730

Balamur, R. et al. A Retina-Inspired Optoelectronic Synapse Using Quantum Dots for Neuromorphic Photostimulation of Neurons. Adv Sci (Weinh) 11, e2401753 (2024). https://doi.org:10.1002/advs.202401753

Muir, D. R. & Sheik, S. The road to commercial success for neuromorphic technologies. Nat Commun 16, 3586 (2025). https://doi.org:10.1038/s41467-025-57352-1

Lanza, M. et al. The growing memristor industry. Nature 640, 613-622 (2025). https://doi.org:10.1038/s41586-025-08733-5

Sangwan, V. K. & Hersam, M. C. Neuromorphic nanoelectronic materials. Nat Nanotechnol 15, 517-528 (2020). https://doi.org:10.1038/s41565-020-0647-z

Pal, A. et al. An ultra energy-efficient hardware platform for neuromorphic computing enabled by 2D-TMD tunnel-FETs. Nat Commun 15, 3392 (2024). https://doi.org:10.1038/s41467-024-46397-3

Hoffmann, A. et al. Quantum materials for energy-efficient neuromorphic computing: Opportunities and challenges. APL Materials 10 (2022). https://doi.org:10.1063/5.0094205

Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. & Eleftheriou, E. Memory devices and applications for in-memory computing. Nat Nanotechnol 15, 529-544 (2020). https://doi.org:10.1038/s41565-020-0655-z

Bongs, K., Bennett, S. & Lohmann, A. Quantum sensors will start a revolution - if we deploy them right. Nature 617, 672-675 (2023). https://doi.org:10.1038/d41586-023-01663-0

Fescenko, I. et al. Diamond magnetometer enhanced by ferrite flux concentrators. Phys Rev Res 2 (2020). https://doi.org:10.1103/physrevresearch.2.023394

Budakian, R. et al. Roadmap on nanoscale magnetic resonance imaging. Nanotechnology 35 (2024). https://doi.org:10.1088/1361-6528/ad4b23

Fang, H. H., Wang, X. J., Marie, X. & Sun, H. B. Quantum sensing with optically accessible spin defects in van der Waals layered materials. Light Sci Appl 13, 303 (2024). https://doi.org:10.1038/s41377-024-01630-y

Sekiguchi, N. et al. Diamond quantum magnetometer with dc sensitivity of sub-10 pT Hz-1/2 toward measurement of biomagnetic field. Physical Review Applied 21 (2024). https://doi.org:10.1103/PhysRevApplied.21.064010

Qiu, Z., Hamo, A., Vool, U., Zhou, T. X. & Yacoby, A. Nanoscale electric field imaging with an ambient scanning quantum sensor microscope. npj Quantum Information 8, 1-7 (2022). https://doi.org:10.1038/s41534-022-00622-3

Garcia-Cortadella, R. et al. Graphene active sensor arrays for long-term and wireless mapping of wide frequency band epicortical brain activity. Nat Commun 12, 211 (2021). https://doi.org:10.1038/s41467-020-20546-w

Bonaccini Calia, A. et al. Full-bandwidth electrophysiology of seizures and epileptiform activity enabled by flexible graphene microtransistor depth neural probes. Nat Nanotechnol 17, 301-309 (2022). https://doi.org:10.1038/s41565-021-01041-9

Balch, H. B. et al. Graphene Electric Field Sensor Enables Single Shot Label-Free Imaging of Bioelectric Potentials. Nano Lett 21, 4944-4949 (2021). https://doi.org:10.1021/acs.nanolett.1c00543

Aslam, N. et al. Quantum sensors for biomedical applications. Nat Rev Phys 5, 157-169 (2023). https://doi.org:10.1038/s42254-023-00558-3

Sainz-Urruela, C., Vera-Lopez, S., San Andres, M. P. & Diez-Pascual, A. M. Graphene-Based Sensors for the Detection of Bioactive Compounds: A Review. Int J Mol Sci 22, 3316 (2021). https://doi.org:10.3390/ijms22073316

Mummareddy, S., Pradhan, S., Narasimhan, A. K. & Natarajan, A. On Demand Biosensors for Early Diagnosis of Cancer and Immune Checkpoints Blockade Therapy Monitoring from Liquid Biopsy. Biosensors (Basel) 11, 500 (2021). https://doi.org:10.3390/bios11120500

Pallares, R. M., Kiessling, F. & Lammers, T. Clinical translation of quantum dots. Nanomedicine (Lond) 19, 2433-2435 (2024). https://doi.org:10.1080/17435889.2024.2405458

Quantum sensing: Poised to realize immense potential in many sectors, <https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/tech-forward/quantum-sensing-poised-to-realize-immense-potential-in-many-sectors> (2024).

Leenen, R. C. A. et al. Prostate Cancer Early Detection in the European Union and UK. Eur Urol 87, 326-339 (2025). https://doi.org:10.1016/j.eururo.2024.07.019

Wigglesworth, S. et al. The incidence and prevalence of epilepsy in the United Kingdom 2013-2018: A retrospective cohort study of UK primary care data. Seizure 105, 37-42 (2023). https://doi.org:10.1016/j.seizure.2023.01.003

Cancer Statistics for the UK, <https://www.cancerresearchuk.org/health-professional/cancer-statistics-for-the-uk> (2020).
